Twenty years after Daniel Kahneman received the Nobel Prize in economics for applying behavioral psychology to economic decision making, the Princeton researcher’s work on dual-process theory continues to provide insight into the factors that shape our thinking when it comes to investing.

Over the past few decades, psychologists, economists and other behavioral scientists have explored some of the rules that appear to govern decision making and — with a particular focus on investors — decision making under risk. Three Nobel Prizes have been awarded for research on decision making in the past quarter century alone, including Gary Becker’s in 1992 and Richard Thaler’s in 2017; both economists hailed from the University of Chicago. The research, usually considered under the rubric of behavioral finance or behavioral economics, broadly suggests ways to produce better outcomes in both wealth creation and the fostering of a sense of well-being.

Our daily decisions are guided by the need to achieve and maintain a general state of well-being. This sense of well-being is based on multiple considerations, which can vary from culture to culture but generally involve a combination of social, emotional and economic factors. To achieve well-being, we constantly make choices and decisions that we believe will get us closer to this goal.

But if we account for systematic factors (government policies, available resources, the state of the economy and so on), which are generally out of anyone’s control, what factors specific to individuals play roles in establishing a sense of well-being? This consideration is particularly relevant in the field of investing, where the behavior of the markets can be volatile and sometimes chaotic, and the input provided by changing prices can rapidly perturb a sense of equilibrium.

One of the most important insights of this research on decision making is known as dual-process theory. Princeton University behavioral psychologist Daniel Kahneman, a colleague of Thaler’s and the 2002 winner of the Nobel in economics, laid out the underlying thesis in his 2011 book, Thinking, Fast and Slow. Kahneman argued that as part of our biological heritage, our brains are split into two parts — two minds. These separate minds employ very different styles of decision making: one that is fast, effortless and based on intuition and emotion, and one that requires effort and is deliberate and analytical. Kahneman calls them System 1 and System 2.

Anatomically, the human brain cannot be divided into two discrete systems. Although some areas such as the prefrontal cortex may be specialized physiologically in the context of one system (in this case the analytical System 2), in general these two systems commingle in the various localized portions of the brain and are not entirely separate regions. Nor are they completely independent, as the generalized process of learning ties the two systems together.

Adding Value to Perception

To understand how these systems shape decision making and well-being, consider homeowners who want to sell their houses. When valuing a house, a homeowner will generally seek an above-market price in the area. A buyer, however, will tend to value a house at the average market price. Because sellers and buyers initially do not attach the same value to a house, they usually only arrive at a price acceptable to both sides after negotiation. From the homeowners’ perspective, is this a question of maximizing profit, or is something else involved? Kahneman contends that there is an attachment bias at work: People value goods they own more than those they don’t. System 1 adds value as an extra, emotional consideration for the home versus the average house.

Investing is clearly shaped by this mental processing dynamic.

System 1’s intuition directly assigns emotional value to perception. Perception is the mental activity involved in interpreting incoming sensory data. Examples include stereo vision, object segmentation and recognition, and smell, color and language cognition. Perception generally changes as System 1 responds to change. System 1 converts sensory input into cognition, which occurs as the brain’s network of neurons provides learned responses to incoming stimuli. System 1 creates the world we effortlessly experience.

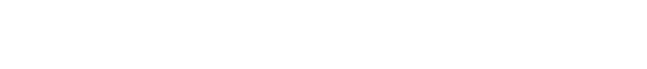

System 2 is the more rational of the two minds. It can apply rules to new challenges, such as riding a bike, playing an instrument or solving a problem. In investing it makes decisions through analysis. To use System 2 you have to concentrate, which requires effort and energy. (See Table 1 for a comparison of the attributes of Systems 1 and 2.)

Typically, System 1 can take over calculations by training its neural network through repetitive attempts; this is called learning. The system acts as a kind of table of data in which the results of complex calculations are stored. If the answer exists, it can be used immediately. Otherwise, System 1 makes a quick guess based on averaging the answers to similar queries in the past.
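The table analogy above can be made concrete with a toy sketch. This is an illustration only, not a model of the brain: the class name `IntuitionTable`, the choice of squaring as the "complex calculation" and the use of the two closest stored entries are all assumptions made for the example.

```python
class IntuitionTable:
    """Toy stand-in for System 1: a table of previously learned answers."""

    def __init__(self):
        self.memory = {}  # maps a stimulus to its learned answer

    def learn(self, stimulus, answer):
        # Repetition stores the result of an effortful calculation.
        self.memory[stimulus] = answer

    def respond(self, stimulus, k=2):
        # A stored answer is returned immediately.
        if stimulus in self.memory:
            return self.memory[stimulus]
        if not self.memory:
            raise ValueError("nothing has been learned yet")
        # Otherwise, guess by averaging the answers to the k most similar queries.
        nearest = sorted(self.memory, key=lambda s: abs(s - stimulus))[:k]
        return sum(self.memory[s] for s in nearest) / len(nearest)


table = IntuitionTable()
for n in range(1, 6):
    table.learn(n, n * n)  # the effortful System 2 result, stored for reuse

print(table.respond(3))    # stored answer: 9
print(table.respond(3.5))  # quick guess: 12.5 (the exact square is 12.25)
```

The guess for 3.5 is fast and close, but not exact, which is the trade-off the paragraph describes.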

Generally, in decision making, System 1 provides a first opinion. System 2 then generates a light review of that opinion. If the opinion generated by System 1 appears irrational, System 2 overrides it — though that’s more the exception than the rule. Note that System 1 is always on and applies itself to perceived data whether you realize it or not, and therefore it dominates decision making in familiar and repetitive settings.

Active investment management involves multiple repetitive transactions, in which System 1 plays a powerful role. To show how some of this logic works, let’s begin with a simple example. Consider the following two sequences, which record the results, in heads (H) or tails (T), of a series of six coin tosses: HTTHTH and HHHTTT. Which sequence is more likely?

Most people select the first as more likely. System 1 visualizes each sequence and discerns patterns. It then matches those patterns to its notion of what the average random sequence of coin flips would look like; for most people it would resemble the first case. The second series has an unusual and noticeable transition pattern, and therefore looks less likely. Therefore, System 1 selects the first case as the more likely sequence.

Coin flips are independent of one another, however, so the chance of any particular sequence of three heads and three tails in six tosses does not depend on the order in which the heads and tails appear: Each of the two sequences has a probability of 1 in 64. The difference is that we notice the transition from all heads to all tails because the key property of perception is the detection of changes. The perception of the stronger pattern leads us to conclude that the second series is rarer than the average.
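A short enumeration confirms the arithmetic. The sketch below assumes a fair coin and independent tosses; the function and variable names are illustrative.

```python
from fractions import Fraction
from itertools import product

# Enumerate every possible outcome of six coin tosses.
sequences = ["".join(s) for s in product("HT", repeat=6)]
total = len(sequences)  # 2**6 = 64


def sequence_probability(seq):
    """Probability of one exact sequence of fair, independent tosses."""
    return Fraction(1, 2) ** len(seq)


p_mixed = sequence_probability("HTTHTH")
p_blocked = sequence_probability("HHHTTT")

# Count the sequences with exactly three heads, in any order.
three_heads = sum(1 for s in sequences if s.count("H") == 3)

print(p_mixed, p_blocked)            # 1/64 1/64
print(Fraction(three_heads, total))  # 5/16 (20 of the 64 sequences)
```

Both sequences are equally likely. What System 1 is really reacting to is that mixed-looking sequences as a group are far more numerous than tidy ones.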

One implication of this perception of change is that in viewing number sequences, financial time series or stock charts, we grow overconfident when we are faced with clearly identifiable patterns based on small amounts of data. This anomaly is known as the law of small numbers.

The Importance of Framing

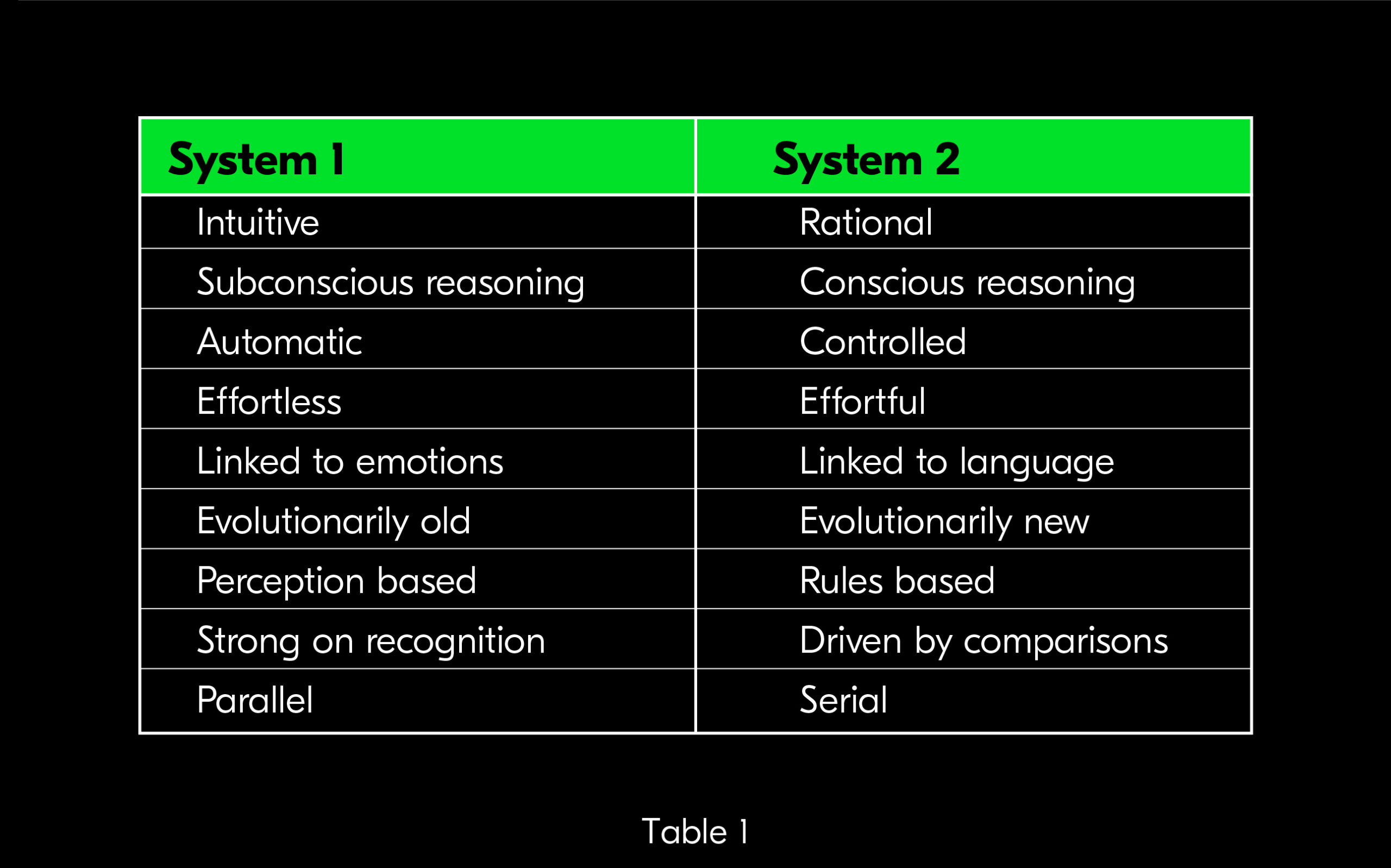

Let’s examine another aspect of perception to show how change detection is a universal feature of our processing of sensory information. Note the two boxes in Figure 1, taken from Kahneman’s Nobel lecture:

The inner square on the left appears brighter than the inner square on the right, but that’s because its brightness is relative to the luminosity of the outer square. The eye’s retina converts light and is mainly sensitive to edges and the edges of edges. This is the information the mind receives, so the absolute brightness of the inner squares is actually a mental reconstruction built by System 1. The two inner gray squares are, in fact, identical.

Decision making is no different from any other mental process, and System 1 primarily perceives changes of state. In investing, the change of state is often expressed as a gain or a loss. This is a very important point because what we perceive directly affects decisions we make.

This phenomenon — generally known as framing — can be explored using two similar hypothetical questions.

Which of the following would you prefer?

A. A 25 percent chance to win $1,000 and a 75 percent chance to win nothing

B. A certain $240

Most individuals opt for the sure bet of B, even though the expected value of A is $10 higher. In this context people take the guaranteed gain. Now let’s view this situation from a different point of view.

Which of the following would you prefer?

A. A 25 percent chance of losing nothing and a 75 percent chance of losing $1,000

B. A sure loss of $760

In this case a large majority of individuals express a preference for A — taking a risk to avoid the sure loss. This is called loss aversion.

However, the choices presented in the two problems are actually identical. Say you subtract $1,000 from both options A and B in the first problem. The 25 percent chance would result in a zero gain (or loss), and the 75 percent chance would produce a $1,000 loss, both identical to option A of the second problem. The same applies to option B: The sure gain of $240 in the first problem becomes a sure loss of $760. The second problem is a rephrasing of the first with a (choice-independent) subtraction of $1,000. All that’s really changed affecting choice is the phrasing of the question, from gains to losses, with the first case highlighting gains and the second highlighting losses.
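The equivalence of the two problems can be checked directly. In this sketch each option is written as a list of (probability, payoff) pairs; that representation and the helper names are assumptions made for illustration.

```python
from fractions import Fraction

# Each option is a list of (probability, payoff in dollars) pairs.
gain_frame = {
    "A": [(Fraction(1, 4), 1000), (Fraction(3, 4), 0)],
    "B": [(Fraction(1), 240)],
}
loss_frame = {
    "A": [(Fraction(1, 4), 0), (Fraction(3, 4), -1000)],
    "B": [(Fraction(1), -760)],
}


def expected_value(lottery):
    """Probability-weighted average payoff."""
    return sum(p * x for p, x in lottery)


def shift(lottery, amount):
    """Add the same amount to every payoff, leaving probabilities unchanged."""
    return [(p, x + amount) for p, x in lottery]


ev_gain = {name: expected_value(lot) for name, lot in gain_frame.items()}
ev_loss = {name: expected_value(lot) for name, lot in loss_frame.items()}

# Subtracting $1,000 from every payoff in the first problem gives the second.
shifted = {name: shift(lot, -1000) for name, lot in gain_frame.items()}
frames_match = shifted == loss_frame

print(ev_gain["A"] - ev_gain["B"])  # 10
print(ev_loss["A"] - ev_loss["B"])  # 10
print(frames_match)                 # True
```

Option A is worth $10 more in expectation under either wording, so a preference that flips between the two problems cannot come from the payoffs themselves.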

So what exactly is happening here? Rephrasing the wording of the problem causes a change of frame. The framing is like the shading of the larger squares in the boxes above. It influences choice; the selection of A versus B resembles our perception of the brightness of the smaller squares. Our decision making is always framed because the frame represents the context and information available when we are thinking about how to answer the question. A change in framing can clearly affect how we value outcomes.

The Power of Losses

The basic understanding of how System 1 values outcomes in investing is known as prospect theory. The underlying theory can be broken up into three tendencies: 1) We perceive mainly gains and losses; 2) we perceive losses more than gains; and 3) we perceive the change from impossible to possible. Kahneman and his longtime collaborator Amos Tversky first elucidated these tendencies. Unfortunately, the Stanford University–based Tversky died in 1996, six years before Kahneman won the Nobel for the pair’s joint discovery of prospect theory. (Nobel Prizes are never awarded posthumously.)

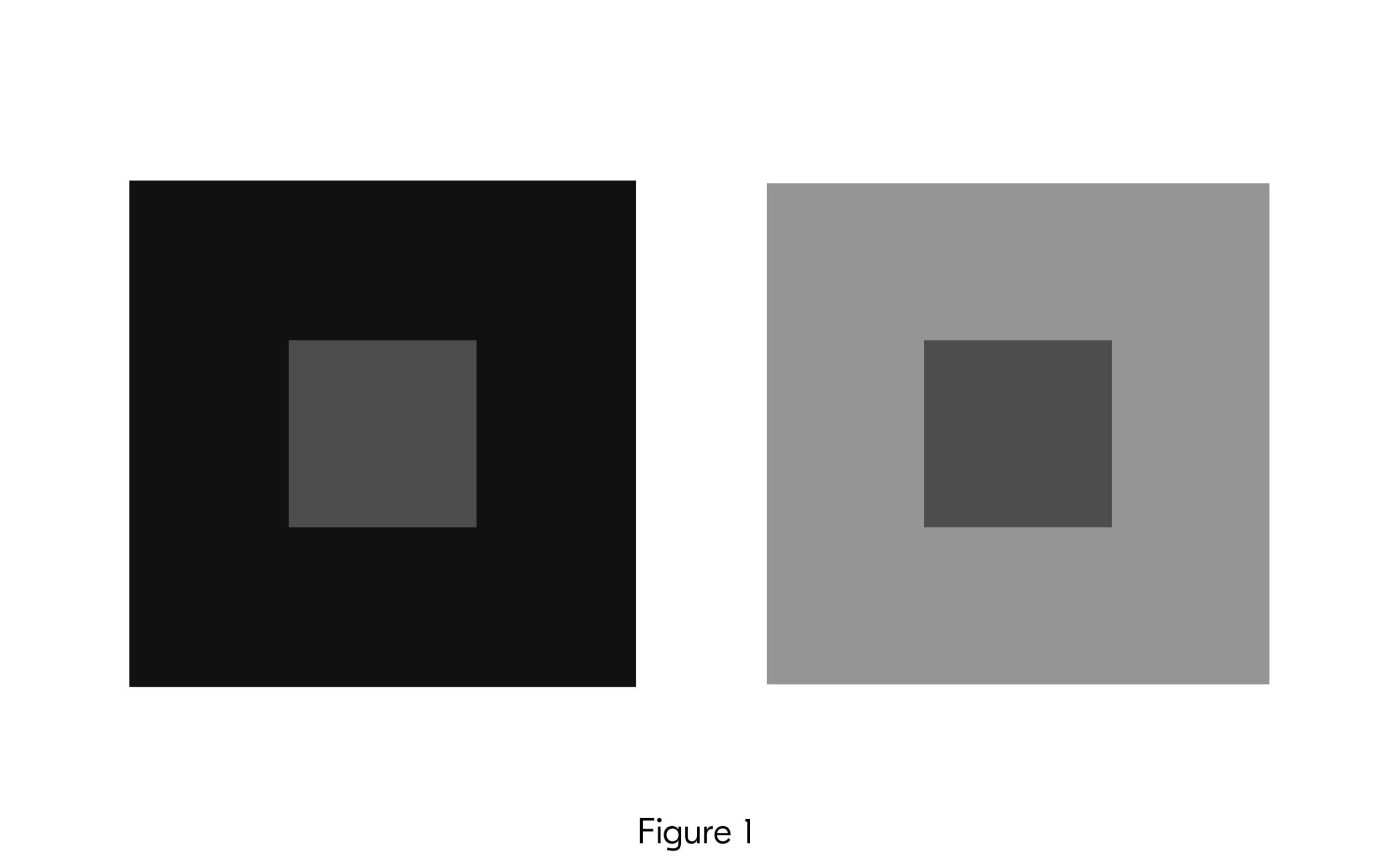

Prospect theory is encapsulated in Figure 3, in which the x-axis denotes gains and losses as units of perception and the slope of the curve representing losses is greater than that for gains.

This difference of value for gains and losses can be explored by considering a simple question: You are offered a bet on the toss of a fair coin. If you lose, you must pay $1,000. What is the minimal amount that you need to win to make this bet attractive?

Most people interpret this as a one-time event with a potential loss of $1,000 and weigh the loss of $1,000 against a single potential gain. The average human response, as indicated in the ratio of the slopes above, is to balance the chance of losing $1,000 against the possibility of winning $2,000.
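The $2,000 answer follows from a simple break-even calculation. The sketch below is a deliberate simplification: it assumes a piecewise-linear value function in which losses count twice as much as gains (the 2-to-1 ratio implied by the $1,000 and $2,000 figures), whereas the curve in prospect theory is not linear. The names `LOSS_AVERSION`, `perceived_value` and `bet_value` are illustrative.

```python
LOSS_AVERSION = 2.0  # assumed ratio of the loss slope to the gain slope


def perceived_value(change, loss_aversion=LOSS_AVERSION):
    """Piecewise-linear value: gains count once, losses count `loss_aversion` times."""
    return change if change >= 0 else loss_aversion * change


def bet_value(win, loss, p_win=0.5):
    """Perceived value of a bet that wins `win` or loses `loss` dollars."""
    return p_win * perceived_value(win) + (1 - p_win) * perceived_value(-loss)


def minimal_acceptable_win(loss, loss_aversion=LOSS_AVERSION):
    # For a fair coin, break-even means 0.5 * win - 0.5 * loss_aversion * loss = 0.
    return loss_aversion * loss


print(minimal_acceptable_win(1000))  # 2000.0
print(bet_value(2000, 1000))         # 0.0, the bet feels neutral
print(bet_value(1000, 1000))         # -500.0, an even-money bet feels like a loss
```

Under these assumptions an even-money bet, which has an expected value of zero, is perceived as a losing proposition.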

Though not shown on the graph, the change from impossible to possible is highly perceptible. This contributes to the popularity of gambling, in which small chances of winning a large jackpot are given so much value. This last feature gives prospect theory its name.

Prospect theory helps us to understand, in part, the role intuition plays in financial decision making. It represents a model of the mind’s cognition of sensory inputs when making decisions. Prospect theory attempts to match an internal feeling of value when contemplating portfolio changes. This constitutes the sense of investing.

Prospect theory is an investing paradigm that replaces expected utility theory, which was introduced by Swiss mathematician and physicist Daniel Bernoulli in 1738. In Bernoulli’s theory of value, the expected utility is calculated using probabilities; risk aversion is represented by the value curve for a given total of net wealth. The key to understanding this formulation is that the input data includes total net wealth. But just as our visual system is not able to perceive absolute shade and brightness of color, our investing sense does not easily perceive net wealth. We are not able to easily execute Bernoulli’s approach because our senses, which are built on neural networks, struggle to perceive steady-state information.

Fortunately, to help our decision-making process, proper framing allows us to introduce and perceive wealth. As an illustration, consider this question:

Which of the following would you prefer, given wealth W?

A. A 25 percent chance to win W plus $1,000 and a 75 percent chance to win only W

B. W + $240

This resembles the earlier question but adds the variable W to the frame. In this case, for many people W triggers the System 2 calculation of 25 percent times $1,000, or $250, which is greater than $240. They would then select option A instead of B. Without such a prompt, people tend to use only the aspects of the problem that are known rather than evaluating the merits of each option. They focus on the quantities given to them instead of recognizing that, although W is not a known variable, the riskier option can yield a greater monetary outcome and could be the better choice (if the level of risk is acceptable to the person).

This may explain why people like Warren Buffett are so deliberate and rational in their decision making: The explicit perception of net wealth and other difficult-to-perceive factors through analysis allows them to reduce perception-based errors in making investment decisions.

Dissonance, Biases and Anchoring

Because System 1 operates as a neural network, it is difficult to reprogram. In particular, it is very hard to unlearn behavior; significant effort is required to do so. Therefore, the System 1 mind discourages it. In the context of decision making, this means people generally feel extremely uncomfortable when presented with evidence that contradicts their own views, a phenomenon known as cognitive dissonance. They instead seek information that confirms their views and therefore “improves” System 1, a tendency known as confirmation bias. System 1 decisions are always based on perception, hence on available (and not necessarily valid) information, a phenomenon known as availability bias.

This process of establishing, maintaining and modifying our views and beliefs has been dubbed anchoring: We get attached to some original information (the anchor) and have a hard time reasoning without it as a starting point, even when the anchor is completely meaningless or arbitrary. Retailers have long understood this and use it successfully in presenting prices. This is why an “undiscounted” price is nearly always shown next to the actual price.

Apart from the inability of System 1 to function without an anchor (and to estimate averages based on that anchor), a trait specific to humans plays a major role in decision-making errors. Biologists now say that humans’ ability to create narratives is an essential part of what makes us Homo sapiens. Roughly 70,000 years ago our species underwent a cognitive revolution that allowed humans to create art and culture and to imagine a past known as history, and a future. As a species, we became capable of speaking of advanced collective fictions. These are not necessarily fictions in the sense of invented stories or falsehoods, but abstract concepts that don’t exist outside of human storytelling, such as nations, laws and currencies. We can even place value on these objects as if they exist in the physical world. This is a primary difference between Homo sapiens and other mammals (including our immediate ancestors, Homo habilis and Homo erectus, which we currently believe lacked this ability), and it has led to our dominance on earth. However, with this ability we frequently confuse coincidence with causality, and this can lead to the illusion of knowledge.

One obvious example of this phenomenon happens at the end of the trading day, when the news media never fail to assign narratives to stock market gains and losses. Though much of the daily market behavior is random, there is always a postmarket story explaining why prices closed up or down. Sometimes the headline and story don’t come close to describing what’s driving the market, particularly when they are written before the last-minute price changes at the close.

Given these behaviors, you might suspect that System 1–based discretionary trading should not be able to outperform a simple System 2–based market allocation benchmark, such as weighting by market capitalization for the S&P 500 index. This is indeed the case, as most market investment professionals consistently underperform their benchmarks. It is therefore reasonable to suspect that the application of System 1 to financial decision making is far more prevalent than that of System 2. And therein lies the problem.

To produce better outcomes for well-being and wealth creation, we must be aware of the workings of our minds. We should endeavor to frame important financial decisions carefully, using valid, quantifiable data to introduce long-term wealth and well-being into the process, and to reduce the weight of highly perceptible short-term events. Although two minds working together can be supremely beneficial, we should be aware of their limitations. We mainly perceive changes and have difficulty estimating real overall value. We have a hard time unlearning and a propensity to seek reinforcing information. Prior opinions and beliefs are difficult to modify, sometimes even in the face of overwhelming evidence. We make choices depending on how questions are framed. Losses are perceived more acutely than gains. And we create narratives out of almost any perceptible changes of state, potentially lending a rational explanation to random events.

For further information on dual-process theory, interested readers might start with Kahneman’s Thinking, Fast and Slow and Michael Lewis’s biography of Tversky and Kahneman, The Undoing Project. Kahneman’s Nobel lecture and the compendium of extended prospect theory research topics are more technical but quite insightful. System 1 often imperfectly applies heuristics or rules to problems, and that misapplication may not be particularly noticeable. This occurs frequently in economic situations such as the pricing of goods via sales. In the behavioral psychology literature, unexpected decisions are often explained as cognitive biases; in the behavioral finance literature, they are viewed as the foundations of many market anomalies. In the larger context extended prospect theory is now accepted as one of the foundations of behavioral economics, which considers economic decisions and their consequences.

We are only at the start of this deeper exploration of the brain and how it shapes human decision-making activities like investing. More Kahnemans and Thalers (and more Nobel Prizes) will surely follow.

Paul Griffin is Chief Science Officer of WorldQuant and leads the firm’s quantitative research program. He has a PhD from Stanford University and a BA from the University of California, Berkeley, both in physics.